Robots are used for repetitive, deterministic tasks on production lines and at robotic assembly workstations, where they carry out a range of processes, including object handling, machine operation, parts assembly, welding, and painting. To maintain safety and security, these systems typically operate fully automatically in isolated cells that run independently of, and separated from, the operators. Robots are known for being flexible, yet they are typically not as flexible as one might think. Automation requires efficient, dependable processes with high output rates, and when products vary, the processes must remain similar enough that task programs and algorithms can be adapted.

High demands for product variety and diversity are driven by market needs. Because of their inflexibility, traditional automated systems cannot handle such variability, which is one reason why some products are often manufactured by highly skilled workers. Combining human workers with automation technology gives the assembly system the required flexibility: the human's unique cognitive and sensorimotor abilities enable the operator to complete semi-automated assembly tasks that today's assembly technologies cannot automate.

The operator's experience and creative thinking make it possible to find solutions even for unstructured or incompletely defined tasks. The robot, in turn, can make the operator's job easier, for instance by moving heavy objects or executing tedious tasks. An efficient division of tasks between human and robot boosts workplace productivity while relieving the worker of physical and mental strain, lowering stress levels, and improving workplace safety by helping to prevent accidents and injuries.[1]

The VALU3S use case is based on a Human-Robot Interaction (HRI) process on the shop floor of a manufacturing-like environment, in which human workers carry out assembly tasks on transformer units consisting of multiple parts. HRI systems have to coordinate humans and robots in line with the safety requirements for collaborative industrial robot systems defined by several standards (e.g., ISO 10218-2:2011). In VALU3S, the verification and validation of these safety requirements is performed through simulation of the use case.

Model-based failure injection

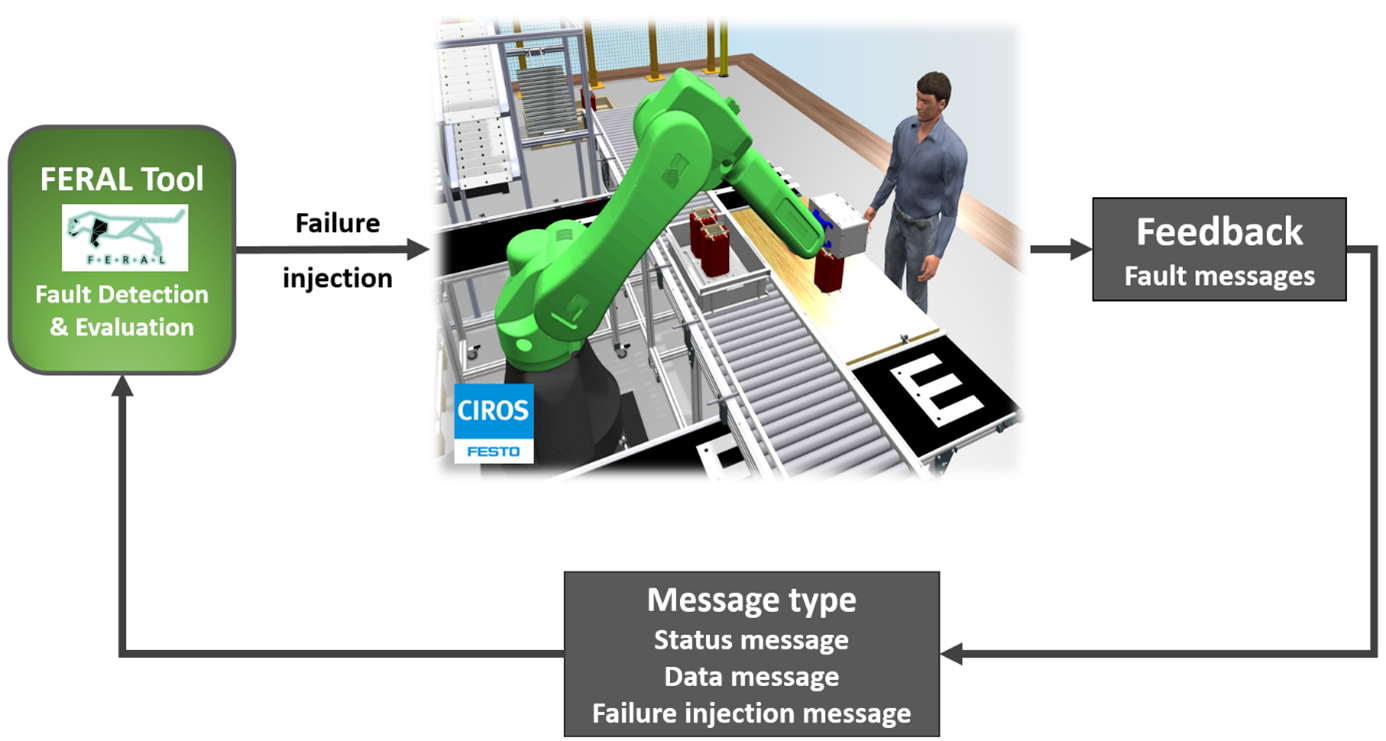

FERAL is a simulation framework from Fraunhofer IESE for creating and evaluating architectural concepts. Simulation-based virtual prototypes replace real prototypes, so the effects of new architectural concepts can be evaluated cost-effectively. FERAL consists of different building blocks that enable the coupling of tools, the integration of complex, heterogeneous scenarios into a test scenario, and the targeted checking of properties in a protected virtual space using digital twins. [4, 5]

Using FERAL, we have implemented a closed-loop fault detection and diagnosis framework in a virtual semi-automated assembly process. Fraunhofer has supported the UC4 demonstrator by extending the simulation framework FERAL with support for domain-specific communication protocols (here: MQTT), a connection to the 3D factory simulation tool CIROS Studio via a Python interface, and a fault injection component that enables fault injection into (1) the simulation models through the MQTT communication protocol and (2) the robot simulation model within CIROS Studio, as shown in Figure 1. [5]
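To make the idea of fault injection via MQTT more concrete, the following is a minimal sketch of an interceptor that sits in the publish path and corrupts selected messages before they reach the broker. It is not FERAL's implementation or API: the classes `Fault` and `FaultInjector`, the fault kinds (offset, stuck-at, drop), the JSON payload layout, and the topic name are all hypothetical. The `publish` callable could, for example, be bound to the `publish` method of a paho-mqtt `Client`; here a plain function is used so the sketch runs without a broker.

```python
import json
import random
from dataclasses import dataclass, field
from typing import Callable, Dict, List


# Hypothetical fault description; FERAL's actual fault model may differ.
@dataclass
class Fault:
    kind: str                 # "offset", "stuck_at" or "drop"
    signal: str               # key in the JSON payload to corrupt
    value: float = 0.0        # offset amount or stuck-at value
    probability: float = 1.0  # chance of applying the fault to a message


@dataclass
class FaultInjector:
    """Sits between a publisher and the broker and corrupts selected messages."""
    publish: Callable[[str, str], None]  # downstream publish(topic, payload)
    faults: Dict[str, List[Fault]] = field(default_factory=dict)
    rng: random.Random = field(default_factory=lambda: random.Random(0))

    def add_fault(self, topic: str, fault: Fault) -> None:
        self.faults.setdefault(topic, []).append(fault)

    def forward(self, topic: str, payload: str) -> None:
        data = json.loads(payload)
        for fault in self.faults.get(topic, []):
            # rng.random() is in [0, 1), so probability 1.0 always applies.
            if self.rng.random() >= fault.probability:
                continue
            if fault.kind == "drop":
                return  # the message is silently lost
            elif fault.kind == "offset":
                data[fault.signal] += fault.value
            elif fault.kind == "stuck_at":
                data[fault.signal] = fault.value
            else:
                raise ValueError(f"unknown fault kind: {fault.kind}")
        self.publish(topic, json.dumps(data))


if __name__ == "__main__":
    # Demo: a plain function stands in for an MQTT client's publish method.
    def fake_publish(topic: str, payload: str) -> None:
        print(topic, payload)

    injector = FaultInjector(publish=fake_publish)
    injector.add_fault("factory/robot1/joints", Fault("offset", "j1", 0.5))
    injector.forward("factory/robot1/joints", json.dumps({"j1": 1.0, "j2": 2.0}))
    # prints: factory/robot1/joints {"j1": 1.5, "j2": 2.0}
```

Keeping the fault model as data (topic, signal, kind, value) rather than code makes it easy to rerun the same simulation scenario with different injected faults, which is what a closed-loop detection and diagnosis setup needs.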

Figure 1: FERAL integration with CIROS

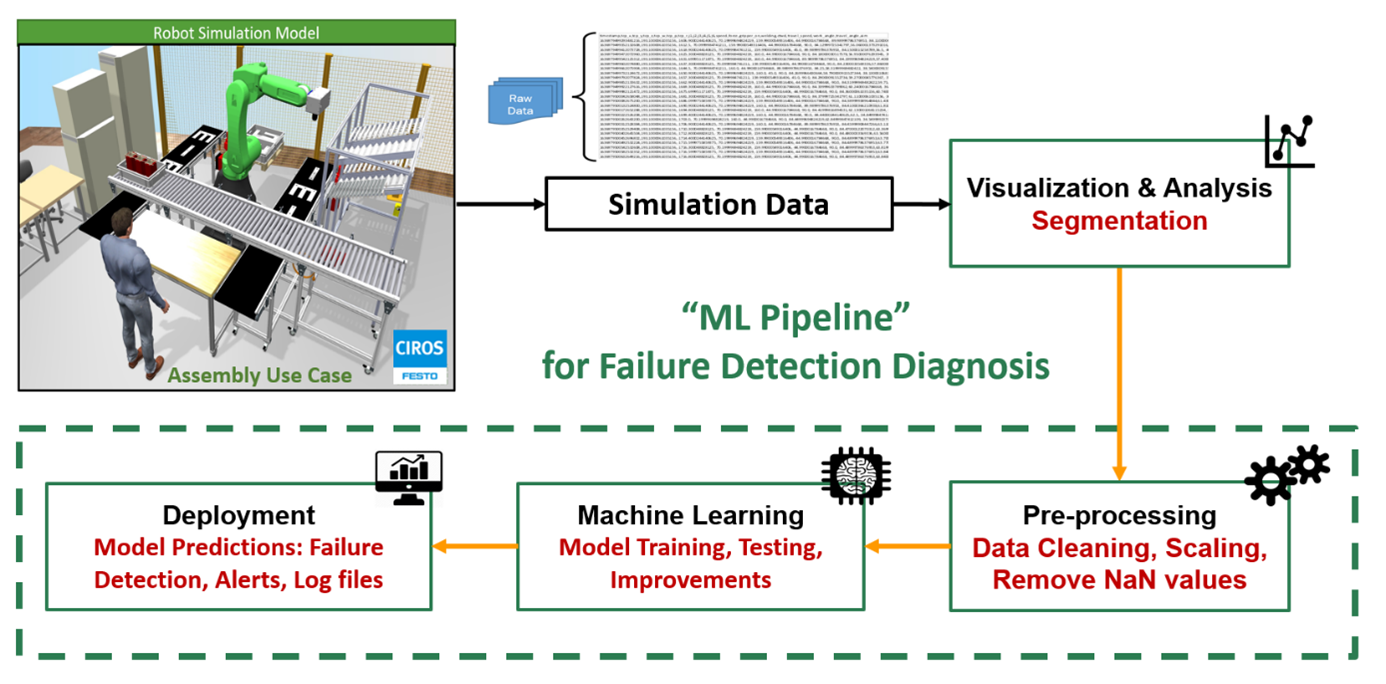

Applying a machine learning pipeline to predict and diagnose unknown faults and failures in a simulated HRC assembly process

Early detection and diagnosis of failures in industrial use cases can significantly improve the performance and maintenance of the equipment used in an assembly process. The applied ML pipeline tool focuses mainly on predicting and detecting failures in an assembly process before they occur. In our use case scenario, the raw data is collected from the CIROS simulation, and the failure detection model is trained on it and deployed for failure prediction and diagnosis. Figure 2 shows the overall flow of the ML pipeline.

Figure 2: Machine Learning pipeline

Robot joint position data is collected from the CIROS simulation of the assembly process in a human-robot collaboration (HRC) environment. The goal is to monitor the behavior of the simulation and to detect failures before they occur.
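Before an LSTM can be trained on such data, the recorded joint positions are typically normalized and cut into fixed-length input windows, each paired with the values that follow it as the prediction target. The sketch below shows one common way to do this with NumPy; the function names, the window lengths `lookback` and `horizon`, and the assumption that the data arrives as a (time steps × joints) array are ours, not details of the described pipeline.

```python
import numpy as np


def min_max_scale(data: np.ndarray):
    """Scale each joint (column) to [0, 1].

    Returns the scaled data plus the per-joint minimum and span, so the
    same scaling can be reapplied to new data at inference time.
    """
    lo = data.min(axis=0)
    hi = data.max(axis=0)
    # Avoid division by zero for joints that do not move in the recording.
    span = np.where(hi > lo, hi - lo, 1.0)
    return (data - lo) / span, lo, span


def make_windows(data: np.ndarray, lookback: int, horizon: int):
    """Cut a (time, joints) series into input windows X and following targets y."""
    n = len(data) - lookback - horizon + 1
    if n <= 0:
        raise ValueError("series is shorter than lookback + horizon")
    X = np.stack([data[i:i + lookback] for i in range(n)])
    y = np.stack([data[i + lookback:i + lookback + horizon] for i in range(n)])
    return X, y  # shapes: (n, lookback, joints) and (n, horizon, joints)
```

At inference time, new data should be scaled with the `lo` and `span` computed on the training data rather than re-fitted, so that the model sees values on the same scale it was trained on.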

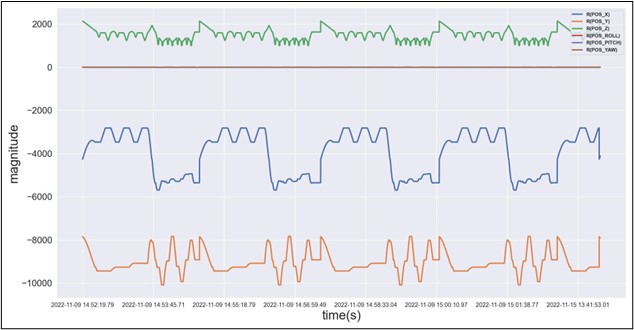

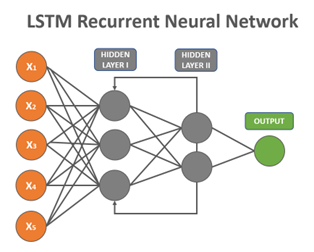

Since our data is time-series data, as shown in Figure 3, a Long Short-Term Memory (LSTM) deep learning model [2] is used to predict anomalies in the assembly process. LSTM networks are a type of recurrent neural network capable of learning patterns and order dependence in sequential data [2].
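For reference, the standard LSTM cell, in the widely used formulation with a forget gate, updates its cell state $c_t$ and hidden state $h_t$ at each time step $t$ as shown below. Here $x_t$ is the input at time $t$ (in this use case, for example, the joint positions), $\sigma$ is the logistic sigmoid, $\odot$ denotes element-wise multiplication, and $W$, $U$, $b$ are learned weights and biases. The notation is ours and is not taken from [2]; the point is that the gates $f_t$, $i_t$, $o_t$ decide what to keep, add, and output, which is what allows information to survive long time lags.

$$
\begin{aligned}
f_t &= \sigma(W_f x_t + U_f h_{t-1} + b_f) \\
i_t &= \sigma(W_i x_t + U_i h_{t-1} + b_i) \\
o_t &= \sigma(W_o x_t + U_o h_{t-1} + b_o) \\
\tilde{c}_t &= \tanh(W_c x_t + U_c h_{t-1} + b_c) \\
c_t &= f_t \odot c_{t-1} + i_t \odot \tilde{c}_t \\
h_t &= o_t \odot \tanh(c_t)
\end{aligned}
$$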

Figure 3: Robot joint position raw data

An LSTM network, as shown in Figure 4, is well suited to learning from experience to classify, process, and predict time-series events when there are very long time lags of unknown size between important events. The basic idea of failure detection with an LSTM neural network is that the system looks at previous values over minutes or hours and predicts the behavior for the next predefined duration. If the predicted values stay within the threshold, there is no problem; if the predicted pattern exceeds the threshold, it is counted as a failure/anomaly and an alert is generated to stop the assembly process. The predictions collected from the ML model are then used for “Failure Detection and Diagnosis”.
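The threshold check described above can be sketched as follows. This is an illustrative sketch, not the project's implementation: a naive linear extrapolation stands in for the trained LSTM (any function with the same signature could be passed as `forecaster`), and the threshold is assumed to be a per-joint lower/upper limit on the predicted positions. The function names and limit values are hypothetical.

```python
import numpy as np


def forecast_linear(history: np.ndarray, horizon: int) -> np.ndarray:
    """Placeholder for the trained LSTM: extrapolates the last step per joint.

    history: (time, joints) array of recent positions.
    Returns a (horizon, joints) array of predicted positions.
    """
    slope = history[-1] - history[-2]
    steps = np.arange(1, horizon + 1)[:, None]
    return history[-1] + steps * slope


def check_forecast(history, horizon, lower, upper, forecaster=forecast_linear):
    """Return (alert, step, joint) for the first predicted value outside [lower, upper].

    step is 1-based (1 = next time step); (False, None, None) means no alert.
    """
    pred = forecaster(history, horizon)
    outside = (pred < lower) | (pred > upper)
    if not outside.any():
        return False, None, None
    # np.argwhere lists indices in row-major order, i.e. earliest step first.
    step, joint = np.argwhere(outside)[0]
    return True, int(step) + 1, int(joint)
```

In the described setup, an alert would then be used to stop the assembly process; how that signal is delivered is not specified here. A widely used variant instead thresholds the prediction error, i.e. the difference between predicted and subsequently observed values, with the threshold derived from errors on failure-free data.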

Figure 4: Recurrent neural network

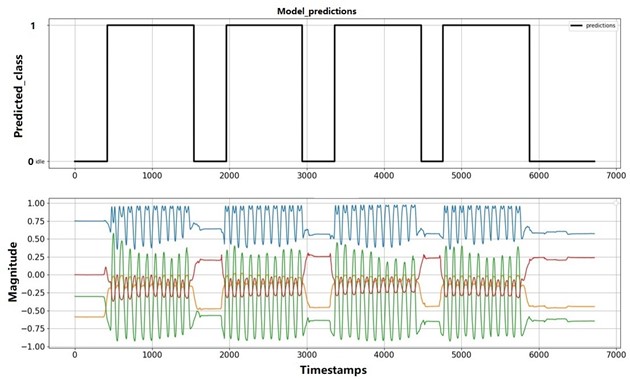

We have also used LSTM networks successfully in other applications, e.g., activity recognition (Figure 5), predictive maintenance of machines, and sales forecasting. Common to all of these projects was that data was collected from a wide variety of sources and sensors, including a fiber-optic sensor suit and IMU-based wearable sensors, machine sensors (temperature, pressure, vibration), and historical sales data.[3]

Figure 5: Activity recognition

References

[1] Bolmsjö, G.; Bennulf, M.; Zhang, X.: Safety System for Industrial Robots to Support Collaboration. In: Advances in Ergonomics of Manufacturing: Managing the Enterprise of the Future. Florida, USA, 2016, pp. 253–265. ISBN 978-3-319-19502-5

https://www.researchgate.net/publication/347201022_Human-robot_collaboration_in_assembly_processes [accessed Nov 14 2022].

[3] https://trinityrobotics.eu/use-cases/data-stream-processing-in-human-robot-collaboration/

[4] https://www.iese.fraunhofer.de/de/leistungen/digitaler-zwilling/FERAL.html

[5] Bauer, T.; Schulte-Langforth, F.; Shahwar, Z.; Bredehorst, B.: Assuring the fault tolerance of system architectures by virtual validation. In: Embedded World 2022 Exhibition & Conference, June 2022.

Zain Shahwar received his bachelor’s degree from Air University, Islamabad, in 2014 and completed his master’s degree at the Universität Bremen, Germany, in 2019. He currently works as a robotics and data analytics engineer at PUMACY TECHNOLOGIES AG. His research interests include robotics simulation, machine learning, deep learning, computer vision, and data science. In addition, he contributes to VALU3S in the research and development of verification and validation methods and tools. His research in this project focuses specifically on the safety of humans and industrial robots in a semi-automated assembly process.

Dr.-Ing. Bernd Bredehorst holds a master’s degree and a PhD in Production Engineering. He has more than 6 years’ experience at a research institute in applied engineering science, working in various national and transnational projects. He then joined PUMACY, working in Knowledge Management and Data Analytics, and has gained more than 15 years’ experience in business development, consulting, solution implementation, and project management in various industries while heading a department and leading multiple teams.

Dr. Thomas Bauer is business area manager for automotive and mobility and project manager in the virtual engineering department at the Fraunhofer Institute for Experimental Software Engineering IESE in Kaiserslautern (Germany). His research interests include the design of large-scale system architectures and the verification and validation of technical, software-intensive systems. In recent years he has focused on the use of virtualization and digital twins for system design and validation. Dr. Bauer has led various strategic research projects in the area of model-based quality assurance of safety-critical software-intensive systems, as well as numerous industry and consulting projects with companies from the automotive and manufacturing domains.

Felix Schulte-Langforth works as a research assistant in the virtual engineering department (VE) of the Fraunhofer Institute for Experimental Software Engineering IESE. His research interests focus on the development and evaluation of architecture concepts as well as virtual prototyping. His contribution to VALU3S is the research and development of verification and validation methods with the simulation and virtual validation framework FERAL. Mr. Schulte-Langforth has worked in various research and industry projects focusing on virtual prototyping, virtual evaluation, and the coupling and execution of simulators and simulation models.